Humanity’s shiny new toy, generative AI like ChatGPT, has proven very powerful at interpreting users’ natural language expressions and quickly producing a wide range of text responses, from copy-editing text to summarizing a dialog. While AI responses sound quite confident, we have gradually discovered the flaws in those responses. For example, I asked ChatGPT, “Who invented IBM System U and later known as IBM Personality Insights?” It responded with the names of two people, who may or may not even exist. This phenomenon is referred to as “AI hallucination.”

But are AI hallucinations all bad?

Before answering, let’s take a quick look at what causes AI hallucinations. In essence, language-based generative AI, the technology behind tools like ChatGPT, learns language patterns and structures from its training data and then generates new content with similar patterns and structures. If the training data or learning process is inadequate or flawed, the model generates inaccurate content, as in the example above. In that example, ChatGPT most likely had no training data on the inventors of IBM System U or Watson Personality Insights, so it manufactured an answer by piecing together the names Dr. Talbot and Dr. Aric with IBM System U based on probability.
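To make that mechanism concrete, here is a minimal toy sketch in Python. It is not how ChatGPT is built; it is a simple bigram model that only remembers which word followed which in its training data. The corpus, the names in it, and the helper functions (`train_bigrams`, `generate`, `all_sentences`) are all hypothetical and invented for illustration.

```python
import random
from collections import defaultdict

# Hypothetical toy corpus; the names and "facts" are invented for illustration.
CORPUS = [
    "alice invented the telescope",
    "bob invented the radio",
]

def train_bigrams(sentences):
    """Record, for each word, the words observed right after it."""
    followers = defaultdict(list)
    for sentence in sentences:
        words = ["<s>"] + sentence.split() + ["</s>"]
        for current, nxt in zip(words, words[1:]):
            followers[current].append(nxt)
    return followers

def generate(followers, rng, max_words=20):
    """Sample one word at a time, in proportion to how often it was seen."""
    word, output = "<s>", []
    for _ in range(max_words):
        word = rng.choice(followers[word])
        if word == "</s>":
            break
        output.append(word)
    return " ".join(output)

def all_sentences(followers, word="<s>", prefix=()):
    """Enumerate every sentence the model can produce (fine for a tiny corpus)."""
    for nxt in sorted(set(followers[word])):
        if nxt == "</s>":
            yield " ".join(prefix)
        else:
            yield from all_sentences(followers, nxt, prefix + (nxt,))

model = train_bigrams(CORPUS)
possible = list(all_sentences(model))
# Sentences the model can emit that never appeared in its training data.
unsupported = [s for s in possible if s not in CORPUS]
print(generate(model, random.Random(0)))
print(unsupported)
```

Every word-to-word step in a generated sentence was seen in training, yet the model can still produce "alice invented the radio," a fluent statement that its training data never supports. That is a hallucination in miniature: familiar patterns recombined into something new and unverified.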

Although ChatGPT made a mistake in piecing together irrelevant information, its ability to apply what it has learned from its training data to synthesize new information is somewhat related to the human capability of “learning by analogy” or “applying a solution of one problem to another situation.” These unique cognitive abilities are what set humans apart from other animals.

As machines gain more and more advanced human cognitive skills, such as drawing proper parallels and reading between the lines, we are in fact witnessing the emergence of a new AI era beyond generative AI: cognitive AI. Machines with cognitive intelligence can do wonders in many real-world applications, including education, healthcare, and career development.

Today, cognitive AI can benefit two areas directly and immediately: translating information or messages based on user characteristics to aid information comprehension and persuasion, and generating new experiences based on those of like-minded people.

FOUND IN TRANSLATION

Every human being is unique, and individual differences make people see, hear, and comprehend the world’s information differently. If AI could “translate” information into a form that a person can better relate to and comprehend, the world would be a better place.

One example is education, where many students struggle with STEM subjects. Imagine an AI tutor that can “translate” course materials and generate quizzes, using examples or situations that a student is familiar with or can easily relate to, based on the student’s unique psychographic characteristics, such as interests (say, sports), cognitive style (preferring visual instructions), and personality (extrovert). This personalization can then stimulate students’ interest in learning and motivate them to learn more and better.

In healthcare, many people have difficulty digesting clinical information or following medical advice. Imagine an AI care assistant that can “translate” healthcare information or advice by using analogies and stories generated based on a person’s unique psychographic characteristics, such as their temperament (for example, impulsive and self-conscious), motivation (taking care of family), and passion (say, gardening). The following example is AI-generated, human-edited healthcare advice on obesity, tailored to a person who is a family-oriented, avid gardener.

Not only will this personalization help patients better comprehend healthcare information and guidance, but it could also motivate them to stay healthy, improving their overall care experience and outcomes.

EXPERIENCE ADAPTATION

A person’s experience is often limited due to various constraints, such as time, geographic, and financial restrictions. If AI can present potential new experiences, it could open up people’s minds as well as help them discover opportunities for optimizing their well-being.

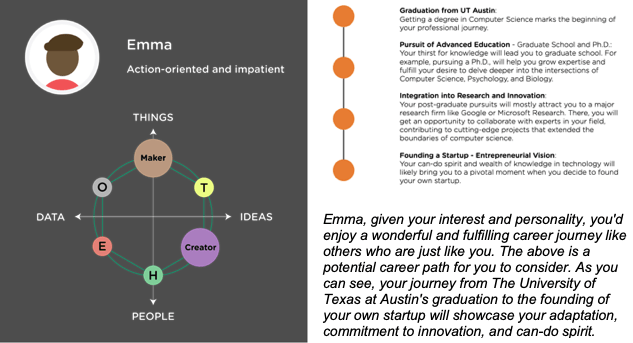

In career development, few individuals, especially young people, have a clear idea of their future career. Imagine that an AI career counselor can understand every student’s passions, interests, talents, and skills, as well as how they handle life’s challenges. It can then help them plot and explore their unique, potential career experiences and possibilities based on the careers of other professionals who are just like them. The AI career counselor can also show students how to best capture these opportunities and maximize their potential. Below is an AI-generated, human-edited potential career experience for a young lady called Emma, who is a freshman studying computer science at the University of Texas at Austin.

Likewise, patients in a healthcare setting dealing with illness can also benefit from this technology. Imagine an AI care assistant that can deeply understand each patient, including their psychographic characteristics. It can give them insight into what their healthcare journey may look like based on the experiences of other patients who are just like them. This “experience transfer” or “experience adaptation” can give patients hope and encourage them to adhere to treatment, improving overall healthcare outcomes.

TAKEAWAYS

For cognitive AI to really work, a few conditions must hold:

- AI will need rich and accurate knowledge, such as real people’s experiences.

- AI will need a deep understanding of users, including psychographic characteristics.

- We’ll need human experts in the loop to amend machine “incorrections” or mistakes.

We are only at the beginning of a new AI era, which has already opened the door to many possibilities that we could not have imagined just a few years ago. Hallucinations may not be a hiccup, per se; they could be the key to the next stage of AI.

Note: A version of this article is published on Fast Company