ChatGPT and similar AI applications have given humankind a new tool. While this tool is powerful, it is not always reliable. The term “AI hallucinations” was coined to describe such unreliable AI output.

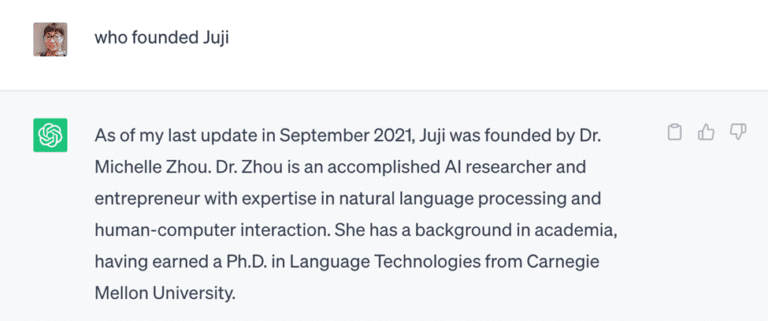

Here is an example. I asked ChatGPT, “Who founded Juji?”, referring to the AI startup I co-founded. It hallucinated with the following reply:

It got several facts wrong, including my education. I received my Ph.D. from Columbia University, not Carnegie Mellon University. Moreover, Juji was co-founded by Dr. Huahai Yang and me, not by me alone.

As the medical community considers the role of generative AI, a question arises: Can an AI that hallucinates still help with high-stakes applications such as patient engagement? The short answer is yes, if such an AI is used appropriately.

Customize an AI Chatbot with Accurate Information

One cause of AI hallucinations is that applications like ChatGPT are trained on public data, which may lack accurate information. For example, if ChatGPT had Juji’s proprietary data on its founding history and founders, it probably would not have made the mistakes seen above. Similarly, AI chatbots designed to engage patients must rely on trustworthy healthcare information. For example, an AI chatbot on Mayo Clinic’s website should be trained with validated, up-to-date healthcare information so that it can give patients and their family members accurate insights into specific diseases and treatments.

Moreover, such information must be updated regularly to remain valid, especially in the healthcare industry, where health situations (e.g., the COVID pandemic) and medical advances evolve rapidly.

Human-in-the-Loop Validation

Let’s assume that we have trained a healthcare AI chatbot with trustworthy healthcare information. Does this mean the AI chatbot won’t hallucinate anymore? The answer is, unfortunately, no. Occasionally, the AI chatbot may still hallucinate by piecing together irrelevant information.

Here is an example: I uploaded our company’s privacy policy into ChatGPT and asked: “Who should I contact regarding Juji’s privacy policy?” It told me to contact “privacy@juji.io,” an email address that does not exist and is never mentioned in our privacy policy.

To further prevent or reduce AI hallucinations, it is thus critical to support a human-in-the-loop validation process. There are typically two types of human-in-the-loop validation: pre-use and post-use. I use a concrete example below to illustrate these two approaches.

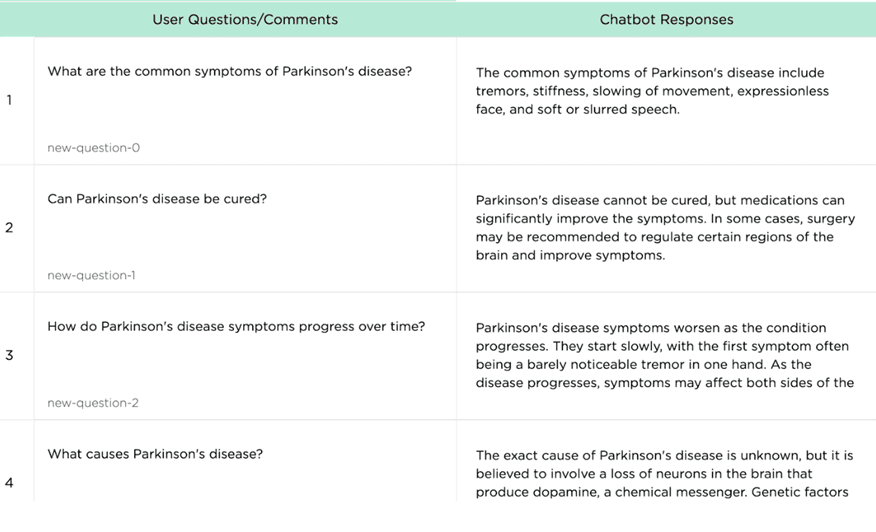

Assume that we have provided a healthcare AI chatbot with information about various diseases like Parkinson’s and Alzheimer’s.

Based on the given disease information, a pre-use validation approach automatically generates a set of possible Q&A pairs, as shown below. A healthcare professional can then examine and validate the generated results before an AI chatbot can use them to answer user questions about various diseases.

While this approach ensures that the chatbot only provides validated information to users, it has its limitations. Suppose a user asks, “What are the symptoms of Parkinson’s disease?” and then asks, “What about Alzheimer’s?” The chatbot can answer the first question easily based on the generated Q&A pairs (see row 1 above), but it cannot answer the second one because the generated Q&A pairs do not cover such context-sensitive questions. The second question is an incomplete expression whose meaning depends on the context. In the example above, it means, “What are the symptoms of Alzheimer’s disease?” If the previous question had instead been “What are the treatment options for Parkinson’s disease?”, it would mean “What are the treatment options for Alzheimer’s disease?”
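The limitation of pre-use validation can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not how any particular chatbot product works: the names `QAPair`, `normalize`, and `PreValidatedBot` are hypothetical, matching is done by naive text normalization, and the answers are placeholders rather than real medical content.

```python
from dataclasses import dataclass


@dataclass
class QAPair:
    question: str
    answer: str
    approved: bool = False  # set to True only after a healthcare professional reviews the pair


def normalize(text: str) -> str:
    """Lowercase and drop punctuation so trivially different phrasings match."""
    return "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace()).strip()


class PreValidatedBot:
    """Hypothetical bot that answers only from reviewer-approved Q&A pairs."""

    def __init__(self, pairs):
        # Unapproved pairs are never exposed to users.
        self.answers = {normalize(p.question): p.answer for p in pairs if p.approved}

    def reply(self, question: str):
        # Returns None when no approved pair matches; the bot never improvises.
        return self.answers.get(normalize(question))


pairs = [
    QAPair("What are the symptoms of Parkinson's disease?",
           "<validated answer about Parkinson's symptoms>", approved=True),
    QAPair("What are the symptoms of Alzheimer's disease?",
           "<validated answer about Alzheimer's symptoms>", approved=True),
    QAPair("What are the treatment options for Parkinson's disease?",
           "<draft answer awaiting review>", approved=False),
]
bot = PreValidatedBot(pairs)

first = bot.reply("What are the symptoms of Parkinson's disease?")  # matches an approved pair
follow_up = bot.reply("What about Alzheimer's?")  # no match: the lookup has no conversation context
```

The follow-up question finds no match even though an approved answer about Alzheimer’s symptoms exists, because the lookup sees each question in isolation.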

On the other hand, a post-use validation approach lets an AI chatbot answer user questions directly, based on both the given information and the conversation context. In the example above, the AI chatbot can answer both questions easily under this approach. The approach then records user-AI interactions so that a human can examine the records, confirm correct answers, and rectify any AI hallucinations. Although this approach may produce hallucinated AI responses at the beginning, it is quite effective at reducing and preventing hallucinations over time because it not only corrects detected hallucinations but also continuously provides verified answers to the chatbot.
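The post-use loop described above (answer, record, review, reuse) might look roughly like the following Python sketch. Everything here is an assumption made for illustration: `PostValidatedBot`, `Interaction`, and the `generate` callable are hypothetical names, `generate` stands in for whatever model produces the context-aware answer, and verified answers are keyed by the exact conversation history and question, which a real system would likely relax.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Interaction:
    history: tuple            # earlier user questions in the same conversation
    question: str
    answer: str
    status: str = "pending"   # "pending", "confirmed", or "corrected"


class PostValidatedBot:
    """Hypothetical bot that answers first and is validated by a human afterwards."""

    def __init__(self, generate: Callable[[list, str], str]):
        self.generate = generate  # stand-in for the underlying model
        self.log = []             # recorded interactions awaiting or past review
        self.verified = {}        # (history, question) -> reviewer-approved answer

    def reply(self, history, question: str) -> str:
        key = (tuple(history), question)
        # Prefer an answer that a reviewer has already confirmed or corrected.
        if key in self.verified:
            return self.verified[key]
        answer = self.generate(list(history), question)
        self.log.append(Interaction(tuple(history), question, answer))
        return answer

    def review(self, interaction: Interaction, corrected_answer: Optional[str] = None):
        """Called by a human reviewer to confirm an answer or replace it."""
        if corrected_answer is None:
            interaction.status = "confirmed"
        else:
            interaction.status = "corrected"
            interaction.answer = corrected_answer
        self.verified[(interaction.history, interaction.question)] = interaction.answer
```

Once a reviewer has confirmed or corrected an interaction, the same question in the same context is served from the verified store instead of being generated again, which is one simple way the chatbot could receive verified answers over time.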

No matter which approach is utilized, it is critical to involve subject matter experts (healthcare professionals in the case of patient engagement chatbots) in such a validation process.

Patient Engagement is Beyond just Q&A

Currently, AI chatbots for patient engagement are mostly used to answer patient questions. Given the power of AI technologies, AI chatbots can do more than patient Q&A. For example, studies show that cognitive AI chatbots can now infer aspects of a person’s personality, such as interests, needs, and temperament, from a conversation. In this light, AI chatbots can engage patients in a genuine two-way conversation, understanding their needs, addressing their concerns, and guiding them to the most appropriate treatment options.

Consider an AI chatbot for mental health. In addition to answering patient questions regarding mental wellness, such as how to improve sleep quality, it can engage a patient in an empathetic conversation, automatically inferring the patient’s personality from the conversation and sharing a story that matches that personality to encourage the patient to maintain their wellbeing. In this case, AI “hallucinations” used to create a story with a fictional character that matches the patient’s inferred personality are valuable because they make the patient feel heard and connected through a relatable experience.

Takeaways

Generative AI technologies like those behind ChatGPT are powerful at understanding human languages and composing natural responses. Though hallucinations may occur, these technologies can significantly enhance patient engagement when used appropriately:

- Make healthcare information accessible by customizing AI chatbots with trustworthy healthcare information
- Further prevent or reduce AI hallucinations by supporting a human-in-the-loop validation process
- Use AI hallucinations along with other AI technologies (e.g., cognitive AI) to enable deeply personalized, empathetic patient engagements beyond supporting patient Q&A

Note: A version of this article was published on Healthcare IT Today.